With 16.8% CAGR, Document Management System Market Size … – GlobeNewswire

April 25, 2022 08:09 ET | Source: Fortune Business Insights

Pune, India, April 25, 2022 (GLOBE NEWSWIRE) — The global document management system market size was USD 5.00 billion in 2021 and reached USD 5.55 billion in 2022. The market is anticipated to reach USD 16.42 billion by 2029, exhibiting a CAGR of 16.8% during the forecast period. The rising demand for paperless government and offices due to the extensive adoption of cloud services is expected to propel the market development. Fortune Business Insights™ provides this information in its report titled “Document Management System Market Growth, 2022-2029.”
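The headline growth rate can be checked with the standard compound annual growth rate formula, CAGR = (end value / start value)^(1/years) - 1. This is an illustrative sketch only. It assumes the 16.8% figure is measured from the 2022 value (USD 5.55 billion) to the 2029 projection (USD 16.42 billion), which is seven compounding years. The `cagr` helper is a hypothetical name and is not part of the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Assumption: the quoted CAGR spans 2022 (USD 5.55 bn) to 2029 (USD 16.42 bn).
rate = cagr(5.55, 16.42, 2029 - 2022)
print(f"{rate:.1%}")  # prints 16.8%
```

Using the 2021 value as the base instead (USD 5.00 billion over eight years) gives roughly 16.0%. This suggests the quoted rate is measured from 2022.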

A document management system (DMS) is a solution that systematically manages documents and files and simplifies data management. Rising demand for paperless government and offices, together with the extensive adoption of cloud-based services, may drive product adoption and industry growth in the coming years.

Request a Sample Copy of the Research Report: https://www.fortunebusinessinsights.com/enquiry/request-sample-pdf/document-management-system-market-106615

Drivers and Restraints

Robust Demand for Workplace Efficiency to Enhance Market Growth

The incorporation of advanced technologies such as artificial intelligence, real-time tracking, and cloud computing is expected to drive product demand. For example, eGrove Systems Corporation announced an integrated, agile project management solution with document and time tracking, designed to improve workplace efficiency through advanced software. Such software also helps companies manage the workplace environment and achieve their goals. These factors may propel the document management system market growth.

However, increasing data privacy concerns and regulatory compliance requirements may hinder market growth.

For the short-term and long-term impact of COVID-19 on the document management system market, please visit: https://www.fortunebusinessinsights.com/document-management-system-market-106615

Regional Insights

Presence of Major Players to Propel Market Progress in North America

North America is expected to dominate the document management system market due to the presence of several major players. The market in North America stood at USD 2.25 billion in 2021 and is expected to capture a large portion of the global market. A developed digital infrastructure is also expected to boost industry progress in the region.

In Asia Pacific, rising adoption of DMS solutions by government, manufacturing, and other sectors is expected to drive market growth.

In Europe, rising investment in digital platforms may boost the adoption of document management systems and support industry progress.

Segments

By component, the market is segmented into solutions and services. By deployment, it is divided into cloud and on-premises. Based on organization size, it is divided into large enterprises and small and medium enterprises. By industry, it is classified into BFSI, IT and telecommunication, government, manufacturing, retail, healthcare, and others. Regionally, it is classified into North America, Europe, Asia Pacific, Middle East & Africa, and South America.

Competitive Landscape

Players Announce Novel Services to Boost Brand Image

Prominent players in the market launch novel services to increase sales and strengthen their brand image. For example, Google LLC announced Lending DocAI, an AI-based service for the mortgage industry. The tool helps mortgage companies speed up document processing by automating routine document reviews and extracting the required data. Such launches may help the company strengthen its brand image. Companies also pursue research and development, mergers, acquisitions, and expansions to grow their annual revenues and global market position.

Quick Buy – Document Management System Market:

https://www.fortunebusinessinsights.com/checkout-page/106615

Report Coverage

The report provides a detailed analysis of the top segments and the latest trends in the market. It comprehensively discusses the driving and restraining factors and the impact of COVID-19 on the market. Additionally, it examines the regional developments and the strategies undertaken by the market’s key players.

COVID-19 Impact

Rising Dependence Upon Digitization to Foster Market Growth

The document management system market is expected to be positively affected during the COVID-19 pandemic because of the rising dependence on digitization. The spike in COVID-19 cases led to restrictions on manufacturing and the closure of many activities, so companies focused on developing digital infrastructure to continue operating and sustain their annual revenues. The resulting accumulation of digital data drives the adoption of effective data management, thereby increasing product adoption. These factors may propel market progress during the pandemic.

Have Any Query? Ask Our Experts: https://www.fortunebusinessinsights.com/enquiry/speak-to-analyst/document-management-system-market-106615

About Us:

Fortune Business Insights™ offers expert corporate analysis and accurate data, helping organizations of all sizes make timely decisions. We tailor innovative solutions for our clients, assisting them to address challenges distinct to their businesses. Our goal is to empower our clients with holistic market intelligence, giving a granular overview of the market they are operating in.

Contact Us:

Fortune Business Insights™ Pvt. Ltd.

US: +1 424 253 0390

UK: +44 2071 939123

APAC: +91 744 740 1245

Email: sales@fortunebusinessinsights.com

30 DocuSign Competitors & Alternatives 2023 (Free + Paid) | by … – DataDrivenInvestor

DataDrivenInvestor

Jun 17

While you are creating, editing, or sending digital documents, you often need to capture an online signature so that the document can be authenticated. To capture a legally binding electronic signature online effectively, you need an electronic signature service.

Without online signature software, you may expose your company to legal trouble. One of the electronic signature tools most commonly used by organizations is DocuSign.

It is available for a nominal monthly fee and is a low-risk way to send a couple of documents per month while learning how online document signing actually works.

However, DocuSign is not the only online signature option.

Competition with DocuSign is intense in the electronic signature software industry. Each DocuSign competitor tries to beat it by offering users the same features at a lower price.

Furthermore, all the DocuSign competitors listed below offer a free trial with some features. You can use one for a few days, and if you are not satisfied, you can easily switch to another DocuSign alternative.

Many competitors and alternatives to DocuSign are available in the market, and several of them are great choices. In this blog, I have listed the top DocuSign competitors and alternatives to make your selection easier.

Let's go through the free DocuSign alternatives one by one. The pricing referred to for each competitor is based on the basic features it offers; you can find out more about business and enterprise plans on each software's website.

➽ SignNow — 1st Free DocuSign Competitor & Alternative

➽ CEO — Borya Shakhnovich

➽ Mobile App: iOS | Android

➽ Location — Brookline, Massachusetts

In the list of DocuSign's competitors, SignNow is a top choice and an electronic signature tool for small businesses that comes with the features needed to sign and send documents. It helps you generate agreements, automate and streamline processes, accept payments, and manage documents.

This application has reusable templates that help in simplifying the process of sending documents and saving time. When it comes to workflows, SignNow allows you to organize documents into groups and send them based on the roles of receivers.

With SignNow, it is also possible to set different actions after signing has been completed.

➽ Wesignature — Free DocuSign Competitor & Alternative

➽ CEO — Ryan Pegram

➽ Mobile App: None, web-based only

➽ Location — Thornton, Colorado

WeSignature is one of the best document signing tools and one of the most widely chosen electronic signature applications today.

Many professionals use this application for personal and professional purposes, which makes it a strong DocuSign competitor.

It is simple, efficient, and effortless software for signing documents, as it lets individuals and organizations sign a wide range of online documents.

Once you adopt WeSignature, you can sign documents, fill out paperwork, and follow up with recipients regularly.

It has consistently proven itself to be one of the best electronic signature services for small businesses.

Once you start using WeSignature, you may be surprised to see turnaround time drop from a couple of days to just a few minutes.

It also lets organizations send multiple documents to people all at once.

➽ Signaturely — Free DocuSign Competitor & Alternative

➽ CEO — Will Cannon

➽ Mobile App: None, web-based only

➽ Location — 340 S Lemon Ave Ste 1760 Walnut, CA 91789

Among the other competitors to DocuSign, Signaturely is well-known e-signature software. It is preferred by many people looking for a simple way to get their documents signed legally, and its simplicity has made it a great alternative to DocuSign.

Signaturely is easy to use and makes online document signing simple. It stands out because it focuses on eliminating unnecessary features and steps so that getting your documents signed is easy.

➽ CocoSign — Free DocuSign Competitor & Alternative

➽ CEO — Stephen Curry

➽ Mobile App: None, web-based only

➽ Location — Singapore

Over the past few years, CocoSign has emerged as a well-known online signature platform. It is one of the best DocuSign competitors and is used for sending, signing, saving, and accessing documents online.

It can automate business processes, helping close deals quickly, safely, and legally.

CocoSign offers a free trial so users can understand how the platform works and how useful it can be. It is one of the best places for online signatures, as it improves businesses by automating significant parts of business deals. It comes with multiple applications, integrations, APIs, and industry-specific solutions.

CocoSign lets you collect signatures digitally without the hassle of managing paperwork. It provides a user-friendly, digital, and integrated experience for creating e-signatures.

It also offers cross-platform functionality and can be accessed anywhere. People use it because it is safe, legally compliant, and efficient.

➽ HelloSign — Free DocuSign Competitor & Alternative

➽ CEO — Joseph Walla

➽ Mobile App: None, web-based only

➽ Location — San Francisco, CA

HelloSign is another online electronic signature tool that brings a wide range of features to the market. It offers great customer service, customization, and flexible pricing.

It also comes with a strong API that lets you embed and brand the signing experience in your online documents.

The company complies with all major electronic signature laws and offers an array of extensions and integrations.

HelloSign is owned by Dropbox and integrates with many tools, such as Google Workspace and Gmail.

➽ Adobe Sign — Free DocuSign Competitor & Alternative

➽ CEO — Shantanu Narayen

➽ Mobile App: iOS | Android

➽ Location — San Jose, CA

Adobe Sign is one of the most feature-rich DocuSign competitors. It is an online signature platform that lets you manage workflows from any location and device. Many people use it because of the seamless electronic document signing experience it offers.

Adobe Sign is an application that is known for its wide integration with third-party tools along with an added focus on global compliance.

It is full of features for both electronic and digital signatures. Many professionals have been choosing Adobe Sign for their personal and professional use.

➽ PandaDoc — Free DocuSign Competitor & Alternative

➽ CEO — Mikita Mikado

➽ Mobile App: iOS | Android

➽ Location — San Francisco, California

Yet another top competitor to DocuSign is PandaDoc, an online signature tool well known for its streamlined user interface and ease of use.

It is an e-signature tool that also provides strong support for document management.

PandaDoc comes with drag-and-drop editing, automated workflows, and audit history. It has multiple integrations, including CRM, file storage applications, and payments.

If you are looking for an effective solution for the management of contracts then PandaDoc is worth giving a shot.

➽ RightSignature — Free DocuSign Competitor & Alternative

➽ CEO — Daryl Bernstein, Cary Dunn, and Jonathan Siegel

➽ Mobile App: None, web-based only

➽ Location — Fort Lauderdale, FL

Next, RightSignature is a strong alternative to DocuSign that offers a wide range of integrations as an important part of the e-signature process. It specializes in making document signing simple.

Users can upload documents to RightSignature and use a drag-and-drop tool to place signature fields inside them.

Users can then email the document to the customer for an optimized online signing experience. RightSignature offers plans for individuals as well as enterprise-level users, and the features and cost differ between them.

RightSignature treats uploaded documents as locked PDFs while letting users drag signature fields on top of the page.

The custom branding with RightSignature is more like a white labeling feature as opposed to a branding kit.

➽ SignWell — Free DocuSign Competitor & Alternative

➽ CEO — Martin Holmstrom

➽ Mobile App: None, web-based only

➽ Location — Portland, Oregon

SignWell is another DocuSign competitor: a cost-effective, user-friendly electronic signature application used by many businesses. It can cut many hours from the usual document signing process and complies with e-signature laws.

It comes with a free plan that includes features such as document tracking, flexible workflows, and reminders, and it has been adopted by a wide range of users in recent years.

➽ SignEasy — Free DocuSign Competitor & Alternative

➽ CEO — Sunil Patro

➽ Mobile App: iOS | Android

➽ Location — Brookline, Massachusetts

SignEasy is another top recommendation for many people and one of the best electronic signature tools for personal use. You can sign up for a free trial and immediately start uploading documents, preparing them for signatures, and sending them.

SignEasy offers wide integration support and works within your favorite applications. For example, you can open a document from Gmail, sign it, and send it without any stress.

Finally, you can also take advantage of features such as automated reminders, tracking, and signing sequences.

➽ Eversign — Free DocuSign Competitor & Alternative

➽ CEO — Julian Zehetmayr

➽ Mobile App: None, web-based only

➽ Location — Wien, Wien

Another choice in this list of DocuSign competitors is Eversign. It is a great solution for users who need legally binding online signatures but do not want to break the bank with a high fee.

Eversign is a cost-effective choice that lets you send many documents per month without an added fee.

Eversign's basic features include audit trails, contract management, and app integrations.

Businesses that want to onboard more users or need additional perks, such as in-person signing, can get them without any extra price tag.

➽ DigiSigner — Free DocuSign Competitor & Alternative

➽ CEO — Jessica Kelly

➽ Mobile App: None, web-based only

➽ Location — 700 N Valley St Suite B Anaheim, CA 92801

DigiSigner is cloud-based electronic signature software and one of the best DocuSign competitors, focusing on speed, affordability, and convenience.

With the service, businesses and individuals can sign contracts and agreements from anywhere in the world.

DigiSigner is compatible with a wide range of devices, including laptops, tablets, and smartphones.

DigiSigner meets all major e-signature laws, such as ESIGN, UETA, and the European eIDAS regulation.

DigiSigner's signatures are legally binding and can be used in a court of law.

➽ SIGNiX — Free DocuSign Competitor & Alternative

➽ CEO — Jay Jumper

➽ Mobile App: None, web-based only

➽ Location — Chattanooga, TN

The next DocuSign competitor is SIGNiX, which makes it easy for partners in highly regulated industries such as real estate, wealth management, and healthcare to use digital signature and online notarization software together.

There are no costs or risks to using the patented SIGNiX FLEX API.

It allows partners to offer military-grade cryptography, enhanced privacy, and permanent legal evidence of a true digital signature without having to deal with paper-based processes.

➽ Scrive — Free DocuSign Competitor & Alternative

➽ CEO — Viktor Wrede

➽ Mobile App: None, web-based only

➽ Location — Stockholm, Stockholm County

Soon after Scrive was founded in 2010, it became a leader in the Nordic e-signature market, earning its place on this list of top DocuSign competitors.

Today, more than 6000 customers in 40+ countries use Scrive to speed up their onboarding and agreements processes with solutions that use electronic signatures and IDs.

As a trusted digitalization partner, Scrive helps businesses of all sizes, even those in highly regulated industries, move forward with their digital transformations.

This includes improving customer experience, security, compliance, and data quality. Scrive is based in Stockholm and is owned by Vitruvian Partners. It has more than 200 employees.

➽ Secured Signing Software — Free DocuSign Competitor & Alternative

➽ CEO — Gal Thompson

➽ Mobile App: None, web-based only

➽ Location — 800 W. El Camino Real, Suite 180 Mountain View CA 94040

Secured Signing Software is a cloud-based service for managing electronic signatures. It works with businesses of all sizes in a wide range of industries, including finance, education, and real estate.

Users can sign documents digitally, send email invitations to complete documents, and make forms with the help of these tools.

Secured Signing lets users send invitations to a group of people. Businesses can also set up reminders and add extra fields to documents.

The solution lets users add electronic signatures to Word documents and set up approval processes. It may also let recipients add or change text before they sign.

Secured Signing works with Salesforce, Realme, and Microsoft Dynamics 365. Face-to-face signing lets customers use an SMS code to prove their identity and then sign on the screen.

In the dashboard, users can see how long it will take to sign a form, as well as download and store signed copies of the form.

If you want to use Secured Signing’s services, you can pay for them each month or pay as you go.

Customer service is available through an online ticketing system, an online knowledge base, phone, and email, among other ways.

➽ eSign Genie — Free DocuSign Competitor & Alternative

➽ CEO — Mahender Bist

➽ Mobile App: None, web-based only

➽ Location — Cupertino, California

Although eSign Genie looks like a bargain at first glance, it offers far more than its price suggests. It is a splendid choice among top-notch DocuSign competitors: despite its low price, it is packed with capabilities that make e-signing more convenient for both signers and organizations.

The process of connecting to eSign Genie is as simple as creating a network connection and using the form-signing tools.

eSign Genie can help you collect document signatures for a fraction of the cost of competing products such as PandaDoc and GetAccept.

For business use, the pay-as-you-go option is one of its most intriguing aspects. This package also includes the ability to sign documents in person and assign signers, two features that are often reserved for more expensive e-signature services.

➽ SignRequest — Free DocuSign Competitor & Alternative

➽ CEO — Geert-Jan Persoon

➽ Mobile App: None, web-based only

➽ Location — Amsterdam, Noord-Holland

SignRequest appears to offer a lot of capability and customizability for senders who need to send multiple documents each month. When preparing documents for multiple signers, the professional plan includes features such as a post-signature landing page and the ability to change the signing order.

This platform is ideal for small-business owners who don’t need to transmit a lot of documentation each month, and it offers everything you need.

You can easily see what paperwork has yet to be completed and what documentation has already been finished thanks to SignRequest’s document management features.

When it comes to creating templates, collecting signer attachments, and selecting the authentication mechanism your signatures may use, SignRequest is the best document signing software for businesses or anyone searching for a quick and easy method to sign.

➽ DrySign — Free DocuSign Competitor & Alternative

➽ CEO — Ron Cogburn

➽ Mobile App: None, web-based only

➽ Location — 2701 E. Grauwyler Road Irving, TX 75061, USA

As cloud-based electronic signature software, DrySign can help speed up internal and external sign-offs, reduce paper-based procedures, and increase team efficiency.

DrySign works with cloud storage services such as Google Drive, Dropbox, and OneDrive, as well as CRM systems. It can be used on a variety of platforms, including PCs, laptops, and handheld devices.

DrySign complies with electronic signature regulations including the ESIGN Act and UETA. It provides an audit trail, multi-factor authentication, and smart tracking to help businesses reduce the risk connected with their transactions.

All documents and activity can be viewed from the dashboard. Multiple signatures can be requested, automated notifications can be set up, changes can be viewed in real time, document fields can be altered, and bulk files can be uploaded.

➽ KeepSolid Sign — Free DocuSign Competitor & Alternative

➽ CEO — Vasiliy Ivanov

➽ Mobile App: iOS | Android

➽ Location — Bronx, New York

Various documents, such as contracts, transactions, and other agreements, can be signed electronically with KeepSolid Sign.

KeepSolid Sign lets you sync documents across several platforms, including PCs, tablets, and cellphones. Data in the system is encrypted with AES-256, and the company also offers apps for iOS and Android devices.

You can sign and annotate documents offline, and your changes are saved. To keep track of a document's progress, the service provides an activity dashboard.

➽ Formstack Sign — Free DocuSign Competitor & Alternative

➽ CEO — Chris Byers

➽ Mobile App: iOS | Android

➽ Location — Fishers, IN

Among DocuSign's competitors, Formstack Sign is an e-signature platform built into the Formstack data management system that lets users sign documents electronically.

Thanks to its accessibility and signature automation features, Formstack Sign is well suited to online forms such as surveys and job applications.

The company traces its origins to FormSpring, which Ade Olonoh founded on February 28, 2006, and which was Formstack's predecessor.

Initially, it was designed as an online form builder that also provided workflow management solutions for sectors such as higher education and marketing.

An added benefit is that Formstack customers without programming or software skills can use integrations with more than 50 web applications, covering customer relationship management (CRM), email marketing, payment processing, and document management.

➽ ZorroSign — Free DocuSign Competitor & Alternative

➽ CEO — Shamsh Hadi

➽ Mobile App: iOS | Android

➽ Location — Phoenix, AZ

Document-based transactions, such as payroll and employee onboarding, can be managed with ZorroSign. It is a cloud-based electronic signature and digital transaction management solution.

ZorroSign uses proprietary, blockchain-based forensic technology to detect document and signature forgery.

If you need document signing software that is more secure than some other DocuSign alternatives, for example in government, legal, or healthcare settings, this is an excellent option. ZorroSign is also positioned as a more environmentally responsible company than other DocuSign competitors.

➽ PDF Filler — Free DocuSign Competitor & Alternative

➽ CEO — Boris Shakhnovich

➽ Mobile App: iOS | Android

➽ Location — Brookline, Massachusetts

For editing, creating, signing, and managing PDF files online, pdfFiller is the best option available. It is one of the greatest alternatives to DocuSign.

Since 2008, it has made it easier for companies and individuals to go paperless!

Additionally, pdfFiller is part of the airSlate Business Cloud, a simple bundle that offers a variety of useful services.

➽ Dochub — Free DocuSign Competitor & Alternative

➽ CEO — Chris Devor

➽ Mobile App: None, web-based only

➽ Location — Boston

DocHub, an online PDF annotation and electronic signature tool, lets users add text and images to their PDFs online. It supports multi-signer workflows, bulk document signing, lossless editing, team collaboration, and more.

The low cost of this program makes it a standout in comparison to DocuSign. The process of setting up and transmitting documents to users has been made simpler.

With DocHub’s editing features and the option to keep multiple signatures on several devices, it’s easy to collaborate with others. As a result of this functionality, it is a significant DocuSign competitor.

➽ EasySIGN — Free DocuSign Competitor & Alternative

➽ CEO — Not Found

➽ Mobile App: None, web-based only

➽ Location — Hapert, The Netherlands

EasySIGN is recognized as a true one-stop software solution for the sign-making and digital large-format printing industries worldwide.

The software provides unique, non-destructive design and production capabilities that are both innovative and easy to use, turning creative ideas into production-ready results.

It is sold through a worldwide network of expert resellers. The program has been translated into numerous languages and is used by a dedicated clientele in more than 25 countries.

➽ Signority — Free DocuSign Competitor & Alternative

➽ CEO — Jane He

➽ Mobile App: None, web-based only

➽ Location — ON Canada

When you use Signority, the eSignature process is completely automated and your document management costs are much reduced. This allows you to focus more on your business.

The software sends documents out for digital signatures and eSignatures and can send reminders. All of your documents can be securely stored and shared in the cloud once they have been completed.

It enables process automation, corporate branding, real-time status alerts and traceability, and a host of additional benefits.

➽ Contractbook — Free DocuSign Competitor & Alternative

➽ CEO — Niels Martin Brochner

➽ Mobile App: None, web-based only

➽ Location — New York, USA

Contractbook is a collaborative contract management platform that automates your processes and syncs contract data across your company's platforms. It is also good document signing software and a DocuSign competitor.

You can use it to handle contracts effectively: all kinds of legal documents can be created, signed, and stored digitally. It also helps foster a more transparent company environment.

The software helps ensure compliance and saves time. With it, legal practitioners can monitor and manage their clients' contracts online with ease and security.

➽ Proposify — Free DocuSign Competitor & Alternative

➽ CEO — Kyle Racki

➽ Mobile App: None, web-based only

➽ Location — Halifax, Nova Scotia

Proposify is a web-based proposal management system. With it, you can take charge of, and gain insight into, the most important part of the sales process, and develop the confidence and flexibility to set the terms of any business deal.

Produce flawless, consistent sales materials. Get the information you need to scale your process, make appropriate commitments, and build accurate forecasts. Streamline the approval process for existing and new clients.

This competitor to DocuSign offers a wide variety of features, including an easy-to-use design editor, electronic signatures, CRM integration, data-driven insights, dynamic pricing and packaging, document management, approval workflows, and more.

➽ Qwilr — Free DocuSign Competitor & Alternative

➽ CEO — Dylan Baskind

➽ Mobile App: None, web-based only

➽ Location — Redfern, New South Wales

Qwilr aims to completely change how companies create and distribute documents online. It lets companies easily convert static web content into interactive, user-friendly mobile experiences. According to the vendor, this enables businesses to provide clients with quotes, proposals, and presentations while also taking advantage of analytics and other capabilities.

Increase your company’s sales output by freeing your sales staff from mundane duties like copying and pasting and allowing them to focus on more strategic initiatives. Build a database of documents that can be readily updated when you receive new contacts. Effective real-time collaboration is made simpler with intuitive commenting and discussion features.

Provide a quote that can be modified in real time, signed digitally, and used in other ways to meet the individual requirements of each client. Without leaving the Qwilr app, your clients can give final approval by checking boxes and providing comments.

Qwilr, like other DocuSign competitors, is a web-based document editor that could help your company save time and look more professional when communicating with customers.

➽ DealHub — Free DocuSign Competitor & Alternative

➽ CEO — Eyal Elbahary

➽ Mobile App: None, web-based only

➽ Location — Austin, Texas

The next notable DocuSign competitor is DealHub, which bills itself as offering the most complete and unified revenue workflow available. Its zero-code platform is designed to help visionary leaders improve cooperation, speed up the sales cycle, and keep a steady flow of leads in the pipeline.

With the support of CPQ, CLM, and Subscription Management tools, which are all driven by an intuitive Sales Playbook, you can speed up the contract negotiation process, improve content delivery, and clinch more deals. A digital DealRoom is a platform where buyers and sellers can connect and exchange information in one convenient location.

Market leaders such as WalkMe, Gong, Drift, Hopin, Yotpo, Sendoso, and Braze are using DealHub to reduce their time to revenue and deliver a consistent sales experience for their sales teams and customers.

➽ DocSend — Free DocuSign Competitor & Alternative

➽ CEO — Russ Heddleston

➽ Mobile App: None, web-based only

➽ Location — San Francisco, CA

Sharing and managing the information that drives your business forward has never been easier than with Dropbox DocSend. Its link-based approach makes it simple to tailor security to each recipient, track file views in real time, evaluate content performance page by page, and set up state-of-the-art virtual deal rooms. Like the other DocuSign competitors listed here, DocSend is an e-signature service that any company can use.

These are some of the best DocuSign competitors, and they can help you figure out which option best suits your needs. Many of the e-signature tools discussed here offer a free version, so customers can find an easy, suitable solution before committing.

In our opinion, SignNow and WeSignature are the best options. Both are among the top online electronic signature tools and come with a wide range of features: you can send unlimited documents, design professional documents for any purpose, and connect numerous integrations.

You can begin a free trial and see how SignNow and WeSignature can help.

If you only need a way to send and sign electronic documents, DocuSign is fantastic. However, teams that want to do more with their documents will find it a poor fit.

Here are some of the reasons why you might want to look at one of DocuSign’s competitors.

The market for electronic signatures is constantly changing.

Other significant e-signing platforms, including SignNow, WeSignature, Signaturely, and PandaDoc, are currently DocuSign's strongest competitors.

There are several eSignature service providers on the market, each offering a slightly different product. Given the abundance of options, though, it can be difficult to decide which eSignature solutions will be most useful for you and which could be a waste of money. Before choosing a DocuSign competitor for your company, consider the following points:

Once you know the answers to those questions, finding the ideal DocuSign competitor for you will be much simpler.

There are cheaper competitors to DocuSign, and SignNow is one of them. If your company needs an easy way to collect valid electronic signatures, look no further than SignNow eSignature software.

SignNow is an excellent DocuSign competitor. With SignNow you can send many more documents for signature at a much lower cost, and it is easier to use and comes with better customer service.


I’m interested by human creativity and technology. Nature enthusiast, self-motivator, visionary, and energetic communicator. Email me: tobykiernan1984@gmail.com


Brother ADS-3100 High-Speed Desktop Scanner Review – PCMag

Straight-up document scanning, basic connectivity

I focus on printer and scanner technology and reviews. I have been writing about computer technology since well before the advent of the internet. I have authored or co-authored 20 books—including titles in the popular Bible, Secrets, and For Dummies series—on digital design and desktop publishing software applications. My published expertise in those areas includes Adobe Acrobat, Adobe Photoshop, and QuarkXPress, as well as prepress imaging technology. (Over my long career, though, I have covered many aspects of IT.)

Brother's entry-level ADS-3100 is a capable sheetfed document scanner that's a good value for home, hybrid, or small offices and workgroups.

At the bottom of the pecking order in Brother’s recent release of a wave of sheetfed desktop document scanners, its ADS-3100 High-Speed Desktop Scanner ($329.99) is relatively fast and reliably accurate. It lists for about $40 less than the next model up, the ADS-3300W, but you give up quite a lot for the savings: network connectivity, a touch-screen control panel, and support for smartphones and other handheld devices, to name a few convenience and productivity features. That’s not to say, however, that small or home offices that plug the ADS-3100 into a solitary computer’s USB port (or scan directly to a USB flash, solid-state, or hard drive) won’t get good value from this scanner. Competition among entry-level and mid-volume document scanners is formidable, but if your business doesn’t demand networkability or wireless scanning, this Brother model should serve you well.

The ADS-3100 is the last of five new sheetfed document scanners from Brother to reach our test bench. The ADS-4900W is the high-volume flagship (and an Editors’ Choice award winner), and the midrange ADS-4700W, ADS-4300N, and ADS-3300W are excellent as well. They are all the same size. The five Brothers measure 7.5 by 11.7 by 8.5 inches (HWD) with their paper trays closed—like most scanners in this category, they double or triple their desktop footprint with trays extended for use—and weigh 6.2 to 6.5 pounds each.

This scanner has too many direct competitors to list here, so we’ll name just four key ones: the Fujitsu ScanSnap iX1400, the Epson WorkForce ES-400 II, the Raven Select Document Scanner, and the Canon imageFormula R50 Office Document Scanner. The ADS-3100 falls short of some rivals by lacking a color touch screen. Its control panel holds only four buttons (Power, Stop/Cancel, Scan to USB, Scan to PC) and a few LED status indicators. This and the ADS-4300N are the only members of the new Brother quintet to lack touch screens.

You can scan to a variety of PDF types (high-compression, image, searchable, secure, or signed), as well as PDF/A, single-page and multipage TIFF, BMP, plain text, and Microsoft Word, Excel, and PowerPoint formats. The scanner’s maximum resolution is 600 dots per inch (1,200dpi interpolated), and it supports document sizes ranging from 2 inches square to 8.5 inches wide by 16.4 feet long, with 24-bit color depth.

Online scanning destinations include cloud, social media, and FTP sites, as well as local drives and email. Most social media and cloud sites are easy to reach, and the ADS-3100 comes preconfigured to support Google Drive, OneDrive, Evernote, Box, Dropbox, OneNote, SharePoint Online, and Expensify.

The ADS-3100 features a 60-sheet automatic document feeder (ADF) for sending single- and double-sided multipage documents to the scanner. Brother rates its daily duty cycle at 6,000 scans. These specs match the ADS-4300N’s; the ADS-4700W has a larger 80-sheet ADF, while the more robust ADS-4900W combines a 100-sheet feeder and a 9,000-scan daily duty cycle.

These specs are more or less average among low-end document scanners. The Raven Select, Epson ES-400 II, and ScanSnap iX1400 all have 50-sheet ADFs, while the Canon R50’s holds 60 sheets. The Fujitsu has the same 6,000-scan duty rating as the Brother; the Epson and Canon are rated for 4,000 and the Raven for only 2,000 daily scans.

Of the five new Brother document scanners, the ADS-3100 is the only one without a wired or wireless network interface—its sole connectivity option, apart from the USB Type-A port around back for scanning directly to storage devices, is a USB 3.0 (or 2.0) cable. That leaves out smartphones and tablets.

The software bundle doesn’t reflect this scanner’s lower-end status, though. It includes Brother iPrint&Scan (desktop) for Windows and Mac, Brother ScanEssentials Lite for Windows, Kofax Power PDF for Windows, Presto! BizCard for Windows and Mac, Image Folio Processing Software for Windows, and Kofax PaperPort SE with OCR for Windows.

iPrint&Scan is an all-in-one printer interface that’s also compatible with Brother’s single-function scanners and printers. It lets you create and manage workflow profiles that you can choose with the front-panel buttons.

ScanEssentials Lite, meanwhile, is a trimmed-down version of another Brother scanner interface that’s also a document-management and financial-data archiving application. Kofax PaperPort also combines a scanner interface with document-management features, among them its own optical character recognition (OCR), workflow profiles, and automated naming conventions.

Presto! BizCard is what it sounds like, a business-card-scanning and contact-archiving program, and Image Folio is an image-capturing program designed to help you scan, edit, enhance, and print photos. You also get four third-party drivers (TWAIN, WIA, ISIS, and SANE) for scanning directly into many compatible applications, such as Adobe Acrobat and Photoshop and the Microsoft 365 suite.

Like its siblings (barring the flagship ADS-4900W), the ADS-3100 is rated at 40 one-sided (simplex) pages per minute (ppm) and 80 two-sided (duplex) images per minute (ipm, where each page side is counted as an image). I put those speed figures to the test using iPrint&Scan over a USB connection to our Intel Core i5 testbed running Windows 10 Pro. (I also ran a few tests with some of the other apps and got similar results, though scanning to USB flash drives is notably faster; see more about how we test scanners.)

First, I clocked the ADS-3100 as it scanned our standard one-sided 25-page and two-sided 25-page (50 sides) documents and then converted and saved them as image PDF files. The scanner managed 41.6ppm and 81.3ipm, barely beating its ratings. The competitors mentioned here did similarly, with the ADS-4900W being faster (68.7ppm and 125.4ipm) and the Epson ES-400 II being slower (37.7ppm and 66.7ipm).
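As a quick illustration of how the rated and measured speeds relate, the sketch below converts an elapsed time into pages or images per minute. It assumes the rate is simply items divided by elapsed time; the elapsed times are hypothetical values back-calculated from the reported 41.6ppm and 81.3ipm, not timings published in the review.

```python
def items_per_minute(items: int, elapsed_seconds: float) -> float:
    """Throughput in items (pages or page sides) per minute."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return items / elapsed_seconds * 60.0


# Hypothetical elapsed times, back-calculated from the reported rates.
SIMPLEX_PAGES = 25       # one-sided test document
DUPLEX_IMAGES = 25 * 2   # two-sided test document: each side counts as an image

ppm = items_per_minute(SIMPLEX_PAGES, 36.06)
ipm = items_per_minute(DUPLEX_IMAGES, 36.9)
print(f"{ppm:.1f} ppm, {ipm:.1f} ipm")  # 41.6 ppm, 81.3 ipm
```

Counting duplex output in images rather than pages is why the ipm figure is roughly double the ppm figure for a scanner that captures both sides in a single pass.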

Next, I timed the Brother as it scanned our two-sided, 25-page hard-copy document and saved it to the more versatile searchable PDF format. The ADS-3100 finished the job in 38 seconds, on the high side of average. The ADS-4900W and the HP ScanJet Pro N4000 snw1 took 34 seconds and 24 seconds respectively, while the Canon R50 took 37 seconds. The Fujitsu made it in 40 seconds, versus 41 for the Raven Select and 44 for the Epson. Frankly, unless you spend most of your day scanning stacks of lengthy documents, these scores should be fast enough for most offices.

Besides, how well a scanner reads text is more important than how quickly it does so. Most document scanners today can convert printed text to searchable PDF format with no errors down to 5 or 6 points. The Brother ADS-3100 was perfectly average, managing scans down to 6-point type problem-free in both our Arial and Times New Roman font tests. Of the other machines discussed here, only the Canon R50 yielded a different result: down to 5 points for Arial, and 6 points for Times New Roman. You’re not likely to encounter text smaller than 10 points in most real-world business documents.

I also scanned a few stacks of business cards into Presto! BizCard, with predictable results: The software does a fine job of digitizing text and figures and putting them into the proper fields in a contacts database (or exporting them to Outlook, Gmail, and other contact management or personal information manager apps). Finally, to see how well the Brother handled images, I scanned several photos to Image Folio. Most were well-detailed, with bright and accurate colors, showing the ADS-3100 can serve as a decent sheetfed photo scanner or alternative to the Epson FastFoto FF-680W and Canon imageFormula RS40. If you have a shoebox of photos stashed under the bed or on a closet shelf, the Image Folio software should deliver additional value.

If you can live without networked or mobile scanning, the Brother ADS-3100 may be right for you, especially if you also have a bunch of photos to archive (though it’s more of a document than a photo scanner). Without wireless or Ethernet connectivity, however, it’s really more of a personal machine for relatively low scanning volumes, around a few hundred per day. (You’d have to fill its 60-sheet ADF 100 times per day, or 14 times per hour, to reach its 6,000-scan limit.) Under the right conditions, though, the ADS-3100 is without question a fine entry-level-to-midrange document scanner.
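The parenthetical arithmetic can be checked in a few lines. The sketch treats each sheet as one scan, as the review's figures do, and the length of the working day is an assumption: the review's "14 times per hour" corresponds to roughly a 7-hour day.

```python
DAILY_DUTY_CYCLE = 6_000  # Brother's rated scans per day
ADF_CAPACITY = 60         # sheets per full automatic document feeder load

# Assumes one scan per sheet, matching the review's arithmetic.
loads_per_day = DAILY_DUTY_CYCLE / ADF_CAPACITY

# Hypothetical working-day lengths; Brother's rating does not specify one.
for hours in (7, 8):
    print(f"{hours}-hour day: {loads_per_day / hours:.1f} full loads per hour")
```

This prints 14.3 loads per hour for a 7-hour day and 12.5 for an 8-hour day, both far beyond what a typical small office would feed the scanner.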


In addition to writing hundreds of articles for PCMag, over the years I have also written for many other computer and business publications, among them Computer Shopper, Digital Trends, MacUser, PC World, The Wirecutter, and Windows Magazine. I also served as the Printers and Scanners Expert at About.com (now Lifewire).

Read William’s full bio


© 1996-2022 Ziff Davis, LLC., a Ziff Davis company. All Rights Reserved.


Technology readiness levels for machine learning systems – Nature.com


Nature Communications volume 13, Article number: 6039 (2022)


The development and deployment of machine learning systems can be executed easily with modern tools, but the process is typically rushed and means-to-an-end. Lack of diligence can lead to technical debt, scope creep and misaligned objectives, model misuse and failures, and expensive consequences. Engineering systems, on the other hand, follow well-defined processes and testing standards to streamline development for high-quality, reliable results. The extreme is spacecraft systems, with mission critical measures and robustness throughout the process. Drawing on experience in both spacecraft engineering and machine learning (research through product across domain areas), we’ve developed a proven systems engineering approach for machine learning and artificial intelligence: the Machine Learning Technology Readiness Levels framework defines a principled process to ensure robust, reliable, and responsible systems while being streamlined for machine learning workflows, including key distinctions from traditional software engineering, and a lingua franca for people across teams and organizations to work collaboratively on machine learning and artificial intelligence technologies. Here we describe the framework and elucidate with use-cases from physics research to computer vision apps to medical diagnostics.

The accelerating use of artificial intelligence (AI) and machine learning (ML) technologies in systems of software, hardware, data, and people introduces vulnerabilities and risks due to dynamic and unreliable behaviors; fundamentally, ML systems learn from data, introducing known and unknown challenges in how these systems behave and interact with their environment. Currently, the approach to building AI technologies is siloed: models and algorithms are developed in testbeds isolated from real-world environments, and without the context of the larger systems or broader products they will be integrated into for deployment. The main concern is that models are typically trained and tested on only a handful of curated datasets, without measures and safeguards for future scenarios, and oblivious to the downstream tasks and users. Moreover, models and algorithms are often integrated into a software stack without regard for the inherent stochasticity and failure modes of the hidden ML components. Consider, for instance, the massive effect random seeds have on deep reinforcement learning model performance [1].

Other domains of engineering, such as civil and aerospace, follow well-defined processes and testing standards to streamline development for high-quality, reliable results. Technology Readiness Level (TRL) is a systems engineering protocol for deep tech [2] and scientific endeavors at scale, ideal for integrating many interdependent components and cross-functional teams of people. It is no surprise that TRL is a standard process and parlance at NASA [3] and DARPA [4].

For a spaceflight project, there are several defined phases, from pre-concept to prototyping to deployed operations to end-of-life, each with a series of exacting development cycles and reviews. This is in stark contrast to common machine learning and software workflows, which promote quick iteration, rapid deployment, and simple linear progressions. Yet the NASA technology readiness process for spacecraft systems is overkill; we need robust ML technologies integrated with larger systems of software, hardware, data, and humans, but not necessarily for missions to Mars. We aim to bring systems engineering to AI and ML by defining and putting into action a lean Machine Learning Technology Readiness Levels (MLTRL) framework. We draw on decades of AI and ML development, from research through production, across domains and diverse data scenarios: for example, computer vision in medical diagnostics and consumer apps, automation in self-driving vehicles and factory robotics, tools for scientific discovery and causal inference, streaming time-series in predictive maintenance and finance.

In this paper, we define our framework for developing and deploying robust, reliable, and responsible ML and data systems, with several real test cases of advancing models and algorithms from R&D through productization and deployment, including essential data considerations; Fig. 1 illustrates the overall MLTRL process. Additionally, MLTRL prioritizes the role of AI ethics and fairness, and our systems AI approach can help curb the large societal issues that can result from poorly deployed and maintained AI and ML technologies, such as the automation of systemic human bias, denial of individual autonomy, and unjustifiable outcomes (see the Alan Turing Institute Report on Ethical AI [5]). The adoption and proliferation of MLTRL provide a common nomenclature and metric across teams and industries. The standardization of MLTRL across the AI industry should help teams and organizations develop principled, safe, and trusted technologies.

Most ML workflows prescribe an isolated, linear process of data processing, training, testing, and serving a model [37]. Those workflows fail to define how ML development must iterate over that basic process to become more mature and robust, and how to integrate with a much larger system of software, hardware, data, and people. Moreover, MLTRL continues beyond deployment: monitoring and feedback cycles are important for continuous reliability and improvement over the product lifetime.

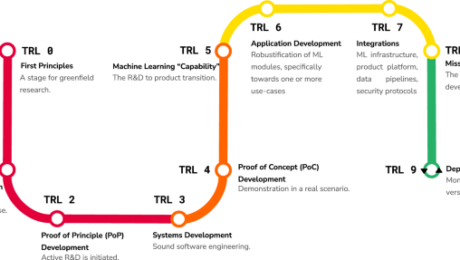

MLTRL defines technology readiness levels (TRLs) to guide and communicate machine learning and artificial intelligence (ML/AI) development and deployment. A TRL represents the maturity of a model or algorithm, data pipelines, software module, or composition thereof; a typical ML system consists of many interconnected subsystems and components, and the TRL of the system is the lowest level of its constituent parts [6]. Note we use “model” and “algorithm” somewhat interchangeably when referring to the technology under development. The same MLTRL process and methods apply for a machine translation model and for an A/B testing algorithm, for example. The anatomy of a level is marked by gated reviews, evolving working groups, requirements documentation with risk calculations, progressive code and testing standards, and deliverables such as TRL Cards (Fig. 2) and ethics checklists. Templates and examples for MLTRL deliverables will be open-sourced upon publication at ai-infrastructure.org/mltrl. These components, which are crucial for implementing the levels in a systematic fashion, as well as MLTRL metrics and methods, are concretely described in examples and in the “Methods” section. Lastly, to emphasize the importance of data tasks in ML, from data curation [7] to data governance [8], we state several important data considerations at each MLTRL level.
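The rule that a system is only as ready as its least mature part can be expressed as a recursive minimum over a component tree. This is a minimal sketch, assuming components are described as nested dictionaries whose leaves are integer levels from 0 to 9; the component names are hypothetical, and MLTRL itself prescribes no such data structure.

```python
from typing import Any, Dict, Union

# A leaf is an integer MLTRL level; a composite maps names to sub-trees.
Tree = Union[int, Dict[str, Any]]


def system_trl(node: Tree) -> int:
    """Return the TRL of a (sub)system: the lowest level among its parts."""
    if isinstance(node, int):
        if not 0 <= node <= 9:
            raise ValueError(f"TRL must be between 0 and 9, got {node}")
        return node
    if not node:
        raise ValueError("a composite needs at least one component")
    return min(system_trl(child) for child in node.values())


# Hypothetical ML system with a nested model subsystem.
ml_system = {
    "data_pipeline": 6,
    "model": {"feature_extractor": 5, "classifier": 4},
    "serving_module": 7,
}
print(system_trl(ml_system))  # 4
```

Because the minimum propagates upward, raising the serving module to a higher level changes nothing until the classifier matures.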

Here is an example reflecting a neuropathology machine vision use-case [22], detailed in the “Discussion” section. Note this is a subset of a full TRL Card, which in reality lives as a full document in an internal wiki. Notice the card clearly communicates the data sources, versions, and assumptions. This helps mitigate invalid assumptions about performance and generalizability when moving from R&D to production and promotes the use of real-world data earlier in the project lifecycle. We recommend documenting datasets thoroughly with semantic versioning and tools such as datasheets for datasets [76], and following data accountability best practices as they evolve (see ref. 81).
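To make the idea concrete, the sketch below models a small slice of a TRL Card that records data sources, semantic versions, and assumptions, and rejects version strings that are not MAJOR.MINOR.PATCH. The class and field names are hypothetical, chosen for illustration; the paper's actual TRL Card is a free-form document, and the dataset shown is invented rather than taken from the neuropathology use-case.

```python
import re
from dataclasses import dataclass, field
from typing import List

SEMVER = re.compile(r"^\d+\.\d+\.\d+$")  # MAJOR.MINOR.PATCH, no pre-release tags


@dataclass
class DatasetEntry:
    name: str
    version: str                 # semantic version of this dataset snapshot
    source: str                  # where the data came from
    assumptions: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not SEMVER.match(self.version):
            raise ValueError(f"{self.name}: {self.version!r} is not MAJOR.MINOR.PATCH")


@dataclass
class TRLCard:
    project: str
    level: int
    datasets: List[DatasetEntry] = field(default_factory=list)

    def summary(self) -> str:
        """Render the data section as plain text, e.g. for a wiki page."""
        lines = [f"{self.project}: Level {self.level}"]
        for d in self.datasets:
            lines.append(f"- {d.name} v{d.version} ({d.source})")
            lines.extend(f"  assumption: {a}" for a in d.assumptions)
        return "\n".join(lines)


# Hypothetical card contents for illustration only.
card = TRLCard(
    project="example-vision-model",
    level=4,
    datasets=[
        DatasetEntry(
            name="sample_images",
            version="0.3.1",
            source="synthetic sample generated in Level 1",
            assumptions=["class balance matches the deployment site"],
        )
    ],
)
print(card.summary())
```

Validating the version at construction time means a card can never silently record an unversioned dataset, which is the failure the paragraph above warns about.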

The levels are briefly defined as follows and in Fig. 1, and elucidated with real-world examples later.

This is a stage for greenfield AI research, initiated with a novel idea, a guiding question, or poking at a problem from new angles. The work mainly consists of literature review, building mathematical foundations, white-boarding concepts and algorithms, and building an understanding of the data. For work in theoretical AI and ML, however, there will not yet be data to work with (for example, a novel algorithm for Bayesian optimization [9], which could eventually be used for many domains and datasets). The outcome of Level 0 is a set of concrete ideas with sound mathematical formulation, to pursue through low-level experimentation in the next stage. When relevant, this level expects conclusions about data readiness, including strategies for making the data suitable for the specific ML task. To graduate, the basic principles, hypotheses, data readiness, and research plans need to be stated, referencing relevant literature. With graduation, a TRL Card should be started to succinctly document the methods and insights thus far; this key MLTRL deliverable is detailed in the “Methods” section and Fig. 2.

Level 0 data—Not a hard requirement at this stage, as this level is largely concerned with theoretical machine learning. That said, data availability needs to be considered when defining any research project that is to move past theory.

Level 0 review—The reviewer here is solely the lead of the research lab or team, for instance, a Ph.D. supervisor. We assess hypotheses and explorations for mathematical validity and potential novelty or utility, not necessarily code or end-to-end experiment results.

To progress from basic principles to practical use, we design and run low-level experiments to analyze specific model or algorithm properties (rather than end-to-end runs for a performance benchmark score). This involves collecting and processing sample data to train and evaluate the model. This sample data need not be the full data; it may be a smaller sample that is currently available or more convenient to collect. In some cases it may suffice to use synthetic data as the representative sample: in the medical domain, for example, acquiring datasets can take many months due to security and privacy constraints, so generating sample data keeps this blocker from stalling early ML development. Further, working with the sample data provides a blueprint for the data collection and processing pipeline (including answering whether it is even possible to collect all necessary data), which can be scaled up for the next steps. The experiments, good results or not, and mathematical foundations need to pass a review process with fellow researchers before graduating to Level 2. The application is still speculative, but through comparison studies and analyses, we start to understand if, how, and where the technology offers potential improvements and utility. Code is research-caliber: the aim here is to be quick and dirty, moving fast through iterations of experiments. Hacky code is okay, and full test coverage is actually discouraged, as long as the overall codebase is organized and maintainable. It is important to start semantic versioning practices early in the project lifecycle, covering code, models, and datasets. This is crucial for retrospectives and reproducibility, whose neglect can be costly and severe at later stages. This versioning information and additional progress should be reported on the TRL Card (see, for example, Fig. 2).
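Semantic versioning for datasets and models can follow the same MAJOR.MINOR.PATCH rules used for code. The mapping of change types below (schema changes as major, added samples as minor, label fixes as patch) is an assumed convention for illustration, not one prescribed by MLTRL.

```python
def bump(version: str, change: str) -> str:
    """Return the next semantic version for the given kind of change."""
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "major":    # e.g. a dataset schema or model interface change
        return f"{major + 1}.0.0"
    if change == "minor":    # e.g. new samples added, backwards compatible
        return f"{major}.{minor + 1}.0"
    if change == "patch":    # e.g. corrected labels, no structural change
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")


print(bump("1.4.2", "minor"))  # 1.5.0
print(bump("1.4.2", "major"))  # 2.0.0
```

Resetting the lower components on a major or minor bump is what lets a reader tell from the version alone whether downstream code can consume the new snapshot unchanged.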

Level 1 data—At minimum, we work with sample data that is representative of downstream real datasets, which can be a subset of real data, synthetic data, or both. Beyond driving low-level ML experiments, the sample data forces us to consider data acquisition and processing strategies at an early stage before it becomes a blocker later.

Level 1 review—The panel for this gated review is entirely members of the research team, reviewing for scientific rigor in early experimentation, and pointing to important concepts and prior work from their respective areas of expertise. There may be several iterations of feedback and additional experiments.

Active R&D is initiated, mainly by developing and running in testbeds: simulated environments and/or simulated data that closely match the conditions and data of real scenarios. Note that these are driven by model-specific technical goals, not necessarily application or product goals (yet). An important deliverable at this stage is the formal research requirements document (with well-specified verification and validation (V&V) steps [10]). A requirement is a singular documented physical or functional need that a particular design, product, or process aims to satisfy. Requirements aim to specify all stakeholders’ needs while not specifying a specific solution. Requirement definitions are incomplete without corresponding measures for verification and validation (V&V). Verification: Are we building the product right? Validation: Are we building the right product? [10] Here is one of several key decision points in the broader process, where the R&D team considers several paths forward and sets the course: (A) prototype development towards Level 3, (B) continued R&D for longer-term research initiatives and/or publications, or some combination of A and B. We find the culmination of this stage is often a bifurcation: some work moves to applied ML, while some circles back for more research. This common MLTRL cycle is an instance of the non-monotonic discovery switchback mechanism (detailed in the “Methods” section and Fig. 3).
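A requirement paired with its verification measure can be captured as a small record. In this sketch, verification is reduced to checking a measured metric against a threshold, and validation to recording which stakeholder confirmed the need; the field names, the assumption that higher metric values are better, and the example requirement are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Requirement:
    rid: str
    need: str                    # states the need, not a particular solution
    metric: str                  # name of the measurement used for verification
    threshold: float             # assumes higher values are better
    validated_by: Optional[str] = None  # stakeholder who confirmed the need

    def verify(self, measurements: Dict[str, float]) -> bool:
        """Verification: are we building the product right?"""
        value = measurements.get(self.metric)
        return value is not None and value >= self.threshold

    def is_validated(self) -> bool:
        """Validation: are we building the right product?"""
        return self.validated_by is not None


req = Requirement(
    rid="R-01",
    need="Flag candidate regions for expert review",
    metric="recall",
    threshold=0.90,
    validated_by="domain expert panel",
)
print(req.verify({"recall": 0.93}), req.is_validated())  # True True
```

Keeping the two checks separate mirrors the distinction above: a model can pass verification against its metric while the requirement itself has never been validated with stakeholders.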

The difference is that the former is circumstantial while the latter is predefined in the process. The other embedded switchback we define in the main MLTRL process is from Level 9 to 4, shown in Fig. 4. While it is true that the majority of ML projects start at a reasonable readiness out of the box, e.g. Level 4, this can make it challenging and problematic to switch back to R&D levels that the team has not encountered and may not be equipped for. In the right diagram we show a common review switchback from Level 5 to 4 (staying in the prototyping phase, orange), and a switchback (faded) that should not be implemented because the prior level was not explicitly done; Level 2 is squarely in the research pipeline (red).
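The rule illustrated in the right diagram, that a switchback should only return to a level the project actually completed, can be written as a simple check. This is a sketch under the assumption that a project's history is tracked as a set of completed levels; the function name and the example project are hypothetical.

```python
from typing import Set


def can_switch_back(current: int, target: int, completed: Set[int]) -> bool:
    """Allow a switchback only to an earlier level the project explicitly completed."""
    return target < current and target in completed


# Hypothetical project that entered MLTRL at Level 4 and is now at Level 5.
completed_levels = {4}
print(can_switch_back(5, 4, completed_levels))  # True: review switchback within prototyping
print(can_switch_back(5, 2, completed_levels))  # False: Level 2 was never done
```

The same check admits the embedded Level 9 to 4 switchback for any project that passed through Level 4 on its way up.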

Level 2 data—Datasets at this stage may include publicly available benchmark datasets, semi-simulated data based on the data sample in Level 1, or fully simulated data based on certain assumptions about the potential deployment environments. The data should allow researchers to characterize model properties, and highlight corner cases or boundary conditions, in order to justify the utility of continuing R&D on the model.

Level 2 review—To graduate from the PoP stage, the technology needs to satisfy research claims made in previous stages (brought to bear by the aforementioned PoP data in both quantitative and qualitative ways) with the analyses well-documented and reproducible.

Here we have checkpoints that push code development towards interoperability, reliability, maintainability, extensibility, and scalability. Code becomes prototype-caliber: A significant step up from research code in robustness and cleanliness. This needs to be well-designed, well-architected for dataflow and interfaces, generally covered by unit and integration tests, meet team style standards, and sufficiently documented. Note the programmers’ mentality remains that this code will someday be refactored/scrapped for productization; prototype code is relatively primitive with regard to the efficiency and reliability of the eventual system. With the transition to Level 4 and proof-of-concept mode, the working group should evolve to include product engineering to help define service-level agreements and objectives (SLAs and SLOs) of the eventual production system.

Level 3 data—For the most part consistent with Level 2; in general, the previous level review can elucidate potential gaps in data coverage and robustness to be addressed in the subsequent level. However, for test suites developed at this stage, it is useful to define dedicated subsets of the experiment data as default testing sources, as well as set up mock data for specific functionalities and scenarios to be tested.
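A minimal sketch of the two kinds of test sources described above, using Python's standard `unittest`: a seeded, fixed subset of the experiment data serves as the default test input, and small hand-written mock inputs target specific scenarios. The `normalize` step, the synthetic stand-in data, and the subset size are hypothetical.

```python
import random
import unittest


def normalize(values: list[float]) -> list[float]:
    """Hypothetical prototype step under test: min-max scale values into [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant input: avoid dividing by zero
    return [(v - lo) / (hi - lo) for v in values]


# Stand-in for the full experiment data; in practice this is loaded from storage.
_rng = random.Random(0)
EXPERIMENT_DATA = [_rng.uniform(-5.0, 5.0) for _ in range(10_000)]


def default_test_subset(data: list[float], size: int = 100, seed: int = 1234) -> list[float]:
    """Fixed, seeded subset of the experiment data used as the default test source."""
    return random.Random(seed).sample(data, size)


class NormalizeTests(unittest.TestCase):
    def test_experiment_subset_lands_in_unit_range(self):
        out = normalize(default_test_subset(EXPERIMENT_DATA))
        self.assertTrue(all(0.0 <= v <= 1.0 for v in out))

    def test_mock_stuck_sensor(self):
        # Mock data for one scenario: a sensor stuck at a single value.
        self.assertEqual(normalize([3.0, 3.0, 3.0]), [0.0, 0.0, 0.0])

    def test_mock_symmetric_range(self):
        self.assertEqual(normalize([-1.0, 0.0, 1.0]), [0.0, 0.5, 1.0])


if __name__ == "__main__":
    unittest.main(argv=["level3-tests"], exit=False)
```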

Level 3 review—Teammates from applied AI and engineering are brought into the review to focus on sound software practices, interfaces and documentation for future development, and version control for models and datasets. There are likely domain- or organization-specific data management considerations going forward that this review should point out—e.g. standards for data tracking and compliance in healthcare11.
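Version control for datasets can start as simply as content hashing, assuming datasets live as files under a directory. The sketch below fingerprints every file and derives a short version ID that changes whenever a file is added, removed, or edited; dedicated data-versioning tools do much more, and the function names here are hypothetical.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def fingerprint_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file's bytes, read in chunks so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def dataset_manifest(root: Path) -> dict[str, str]:
    """Map each file under root (as a POSIX-style relative path) to its content hash."""
    return {
        p.relative_to(root).as_posix(): fingerprint_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def dataset_version(manifest: dict[str, str]) -> str:
    """Short version ID that changes if any file is added, removed, or edited."""
    blob = json.dumps(manifest, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()[:12]


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train.csv").write_text("x,y\n1,0\n")
    v1 = dataset_version(dataset_manifest(root))
    (root / "train.csv").write_text("x,y\n1,1\n")  # one label edited
    v2 = dataset_version(dataset_manifest(root))
    print(v1, v2, v1 != v2)
```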

This stage is the seed of application-driven development; for many organizations this is the first touch-point with product managers and stakeholders beyond the R&D group. Thus TRL Cards and requirements documentation are instrumental in communicating the project status and onboarding new people. The aim is to demonstrate the technology in a real scenario: quick proof-of-concept examples are developed to explore candidate application areas and communicate the quantitative and qualitative results. It is essential to use real and representative data for these potential applications. Thus data engineering for the PoC largely involves scaling up the data collection and processing from Level 1, which may include collecting new data or processing all available data using scaled experiment pipelines from Level 3. In some scenarios, new datasets will be brought in for the PoC, for example from an external research partner as a means of validation. Hand-in-hand with the evolution from sample to real data, the experiment metrics should evolve from the ML research setting to the applied setting: proof-of-concept evaluations should quantify model and algorithm performance (e.g., precision and recall on various data splits), computational costs (e.g., CPU vs. GPU runtimes), and metrics that are more relevant to the eventual end-user (e.g., the number of false positives in the top-N predictions of a recommender system). We find that this PoC exploration reveals specific differences between clean, controlled research data and noisy, stochastic real-world data. These issues can be readily identified because of the well-defined distinctions between development stages in MLTRL, and then targeted for further development.
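To make the end-user metric above concrete, the following sketch counts false positives among the top-N recommendations and averages them per data split. It assumes “relevant” means items the user actually engaged with; the split names and data are made up for illustration.

```python
def false_positives_at_n(ranked: list[str], relevant: set[str], n: int) -> int:
    """Number of items in the top-n recommendations that the user did not find relevant."""
    return sum(1 for item in ranked[:n] if item not in relevant)


def precision_at_n(ranked: list[str], relevant: set[str], n: int) -> float:
    top = ranked[:n]
    if not top:
        return 0.0
    return (len(top) - false_positives_at_n(ranked, relevant, n)) / len(top)


def mean_false_positives(split: list[tuple[list[str], set[str]]], n: int) -> float:
    """Average top-n false positives per user over one data split."""
    return sum(false_positives_at_n(r, rel, n) for r, rel in split) / len(split)


# Hypothetical evaluation data: (ranked recommendations, items the user engaged with).
splits = {
    "validation": [(["a", "b", "c", "d"], {"a", "d"}), (["x", "y", "z"], {"x", "y", "z"})],
    "new_users": [(["p", "q", "r"], set()), (["m", "n", "o"], {"o"})],
}
for name, split in splits.items():
    print(name, mean_false_positives(split, n=3))
```

With n=3, the validation split averages 1.0 false positives per user and the new-user split 2.5, the kind of gap between splits a PoC evaluation should surface.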

AI ethics processes vary across organizations, but all should engage in ethics conversations at this stage, including the ethics of data collection and the potential for harm or discriminatory impacts due to the model (now that the AI capabilities and datasets are known). MLTRL requires ethics considerations to be reported on TRL Cards at all stages, which generally link to an extended ethics checklist. The key decision point here is whether to push onward with application development. It is common to pause projects that pass Level 4 review, waiting for a better time to dedicate resources, and/or to pull the technology into a different project.

Level 4 data—Unlike in the previous stages, real-world and representative data is critical for the PoC; even with methods for verifying that data distributions in synthetic data reliably mirror those of real data, sufficient confidence in the technology must be achieved with real-world data from the use-case. Further, one must consider how to obtain the high-quality and consistent data required for future model inference: this means building a data pipeline PoC that resembles the future inference pipeline, taking data from the intended sources, transforming it into features, and sending it to the model for inference.
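The data pipeline PoC described above can be sketched as three swappable stages with the same shape as the future inference path: a source, a feature transform, and a model. The source, field names, feature scaling, and linear “model” below are placeholders, and rejecting records with missing fields is one assumed way to enforce consistent inference data.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, Iterator

Record = dict[str, Any]  # one raw record from a data source


@dataclass
class InferencePipeline:
    """Hypothetical PoC pipeline with the same shape as the future inference path."""
    source: Callable[[], Iterable[Record]]        # intended data source
    to_features: Callable[[Record], list[float]]  # feature transformation
    model: Callable[[list[float]], float]         # trained model (placeholder here)

    def run(self) -> Iterator[tuple[Record, float]]:
        for record in self.source():
            yield record, self.model(self.to_features(record))


def sensor_source() -> list[Record]:
    # Stand-in for the production source; field names are made up.
    return [{"temp_c": 21.5, "humidity": 0.40}, {"temp_c": 30.0, "humidity": 0.75}]


def to_features(record: Record) -> list[float]:
    # Reject inconsistent records early instead of silently scoring them.
    missing = {"temp_c", "humidity"} - set(record)
    if missing:
        raise ValueError(f"record missing fields: {sorted(missing)}")
    return [record["temp_c"] / 50.0, record["humidity"]]


def toy_model(features: list[float]) -> float:
    # Placeholder linear scorer standing in for the trained model.
    return 0.6 * features[0] + 0.4 * features[1]


pipeline = InferencePipeline(sensor_source, to_features, toy_model)
for record, score in pipeline.run():
    print(record, round(score, 3))
```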

Level 4 review—Demonstrate utility for one or more practical applications (each with multiple datasets), taking care to communicate assumptions and limitations, and again review data-readiness: evaluate the real-world data for quality, validity, and availability. The review also evaluates security and privacy considerations; defining these in the requirements document with risk quantification is a useful mechanism for mitigating potential issues (discussed further in the Methods section).
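Risk quantification in a requirements document is often done with a likelihood-by-severity matrix. The sketch below assumes a 3×3 scale, scores each risk as likelihood times severity, and flags anything scoring 6 or more for mitigation before sign-off; the scale, threshold, and example risks are illustrative choices, not the scheme from the Methods section.

```python
from dataclasses import dataclass
from enum import IntEnum


class Likelihood(IntEnum):
    RARE = 1
    POSSIBLE = 2
    LIKELY = 3


class Severity(IntEnum):
    MINOR = 1
    MAJOR = 2
    CRITICAL = 3


@dataclass(frozen=True)
class Risk:
    description: str
    likelihood: Likelihood
    severity: Severity

    @property
    def score(self) -> int:
        # Risk-matrix scoring: likelihood times severity (1 to 9 on this scale).
        return int(self.likelihood) * int(self.severity)


def must_mitigate(risks: list[Risk], threshold: int = 6) -> list[Risk]:
    """Risks scoring at or above threshold, highest first (ties keep input order)."""
    return sorted((r for r in risks if r.score >= threshold), key=lambda r: r.score, reverse=True)


# Hypothetical entries from a requirements document.
risks = [
    Risk("Personal data exposed in prediction logs", Likelihood.POSSIBLE, Severity.CRITICAL),
    Risk("Stale features from a delayed upstream job", Likelihood.LIKELY, Severity.MAJOR),
    Risk("Confidence score mislabeled in the UI", Likelihood.LIKELY, Severity.MINOR),
    Risk("Training data poisoned via a public source", Likelihood.RARE, Severity.CRITICAL),
]
for r in must_mitigate(risks):
    print(r.score, r.description)
```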

At this stage the technology is more than an isolated model or algorithm; it is a specific capability. For instance, producing depth images from stereo vision sensors on a mobile robot is a real-world capability beyond the isolated ML technique of self-supervised learning for RGB stereo disparity estimation. In many organizations, this represents a technology transition or handoff from R&D to productization. MLTRL makes this transition explicit, evolving the requisite work, guiding documentation, objectives and metrics, and team; indeed, without MLTRL it is common for this stage to be erroneously skipped entirely, as shown in Fig. 4. An interdisciplinary working group is defined, as we start developing the technology in the context of a larger real-world process, i.e., transitioning the model or algorithm from an isolated solution to a module of a larger application. The ML technology should no longer be owned entirely by ML experts: by this stage, steps should have been taken to share it with others in the organization via demos, example scripts, and/or an API, since the knowledge and expertise cannot remain within the R&D team, let alone with an individual ML developer. Graduation from Level 5 should be difficult, as it signifies the dedication of resources to push this ML technology through productization. This transition is a common challenge in deep-tech, sometimes referred to as “the valley of death”, because project managers and decision-makers struggle to allocate resources and align technology roadmaps to effectively move to Levels 6, 7, and onward. MLTRL directly addresses this challenge by stepping through the technology transition or handoff explicitly.
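One way to make the move from isolated model to application module concrete is to have the application depend on a small interface rather than on a specific model. The sketch below uses a Python `Protocol`; `ModuleInfo`, `Predictor`, and the trivial `MeanBaseline` are hypothetical names, and a real module would sit behind whatever API the working group agrees on.

```python
from dataclasses import dataclass
from typing import Protocol, Sequence


@dataclass(frozen=True)
class ModuleInfo:
    """Minimal metadata shipped with the module (hypothetical fields)."""
    name: str
    version: str
    intended_use: str


class Predictor(Protocol):
    """Interface the larger application codes against, rather than a specific model."""
    info: ModuleInfo

    def predict(self, batch: Sequence[Sequence[float]]) -> list[float]: ...


class MeanBaseline:
    """Trivial implementation; a trained model would expose the same interface."""
    info = ModuleInfo("mean-baseline", "0.1.0", "demos and integration tests only")

    def predict(self, batch: Sequence[Sequence[float]]) -> list[float]:
        return [sum(row) / len(row) for row in batch]


def application_step(model: Predictor, batch: Sequence[Sequence[float]]) -> dict:
    # The application depends only on the Predictor interface, so models can be swapped.
    return {"model": f"{model.info.name}@{model.info.version}", "scores": model.predict(batch)}


print(application_step(MeanBaseline(), [[1.0, 3.0], [2.0, 2.0, 5.0]]))
```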

In the left diagram (a subset of the Fig. 1 pipeline, same colors), the arrows show a common development pattern with MLTRL in industry: projects go back to the ML toolbox to develop new features (dashed line), and frequent, incremental improvements are often made by jumping back a couple of levels to Level 7 (the main systems integration stage). At Levels 7 and 8 we stress the need for tests that run use-case-specific critical scenarios and data slices, which are highlighted by a proper risk-quantification matrix78. Reviews at these levels commonly catch gaps or oversights in the test and validation scenarios, resulting in frequent cycles back to Level 7 from 8. Cycling back to lower levels is not just a late-stage mechanism in MLTRL; rather, “switchbacks” occur throughout the process. Cycling back to Level 7 from 8 for more tests is an example of a review switchback, while the solid line from Level 9 to 7 is an embedded switchback, where MLTRL defines certain conditions that require cycling back levels (see more in the “Methods” section and throughout the text). In the right diagram, we show the more common approach in industry (without our framework), which skips essential technology transition stages (gray): ML engineers push straight through to deployment, ignoring important productization and systems integration factors. This is discussed in more detail in the “Methods” section.

Level 5 data—For the most part consistent with Level 4. However, considerations need to be taken for scaling of data pipelines: there will soon be more engineers accessing the existing data and adding more, and the data will be getting much more use, including automated testing in later levels. With this scaling can come challenges with data governance. The data pipelines likely do not mirror the structure of the teams or broader organization. This can result in data silos, duplications, unclear responsibilities, and missing control of data over its entire lifecycle. These challenges and several approaches to data governance (planning and control, organizational, and risk-based) are detailed in Janssen et al.8.
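Several of the governance problems above (unclear responsibilities, duplications, lost lineage) can be checked mechanically at registration time. The sketch below is a hypothetical in-memory catalog that requires an owner, rejects content that duplicates an existing dataset, and refuses unknown upstream datasets so that lineage can always be traced; it is not one of the specific approaches in Janssen et al., and the hash values are placeholders.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    name: str
    owner: str          # team accountable for the data over its lifecycle
    content_hash: str   # e.g. a SHA-256 of the dataset files
    upstream: list[str] = field(default_factory=list)  # datasets this one is derived from


class DataCatalog:
    """Hypothetical minimal catalog: every dataset has an owner and known lineage."""

    def __init__(self) -> None:
        self._entries: dict[str, DatasetEntry] = {}

    def register(self, entry: DatasetEntry) -> None:
        if not entry.owner:
            raise ValueError(f"{entry.name}: an owner is required")
        unknown = [u for u in entry.upstream if u not in self._entries]
        if unknown:
            raise ValueError(f"{entry.name}: unregistered upstream datasets {unknown}")
        for existing in self._entries.values():
            if existing.content_hash == entry.content_hash:
                raise ValueError(f"{entry.name} duplicates {existing.name}")
        self._entries[entry.name] = entry

    def lineage(self, name: str) -> list[str]:
        """All datasets that `name` transitively derives from, nearest first (BFS)."""
        seen: set[str] = set()
        order: list[str] = []
        queue = deque(self._entries[name].upstream)
        while queue:
            current = queue.popleft()
            if current not in seen:
                seen.add(current)
                order.append(current)
                queue.extend(self._entries[current].upstream)
        return order


catalog = DataCatalog()
catalog.register(DatasetEntry("raw_events", "data-platform", "h1"))
catalog.register(DatasetEntry("sessions", "analytics", "h2", ["raw_events"]))
catalog.register(DatasetEntry("training_v1", "ml-team", "h3", ["sessions"]))
print(catalog.lineage("training_v1"))  # ['sessions', 'raw_events']
```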

Level 5 review—The verification and validation (V&V) measures and steps defined in earlier R&D stages (namely Level 2) must all be completed by now, and the product-driven requirements (and corresponding V&V) are drafted at this stage. We thoroughly review them here and make sure there is stakeholder alignment (at the first possible step of productization, well ahead of deployment).

The main work here is significant software engineering to bring the code up to product-caliber: this code will be deployed to users and thus needs to follow precise specifications, have comprehensive test coverage, well-defined APIs, etc. The resulting ML modules should be robustified towards one or more target use-cases. If those target use-cases call for model explanations, the methods need to be built and validated alongside the ML model, and tested for their efficacy in faithfully interpreting the model’s decisions. Crucially, this evaluation needs to be in the context of downstream tasks and the end-users, as ML explainability methods often serve ML engineers rather than external stakeholders12. Similarly, we need to develop the ML modules with known data challenges in mind, specifically to check the robustness of the model (and broader pipeline) to changes in the data distribution between development and deployment.
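One ingredient of checking robustness to distribution changes is detecting when deployment data has drifted from development data. As a swapped-in illustration (the text does not prescribe a test), the sketch below implements the two-sample Kolmogorov-Smirnov statistic for a single numeric feature and compares it with the standard large-sample critical value; `scipy.stats.ks_2samp` provides a full implementation, and the synthetic Gaussian data are assumptions.

```python
import math
import random


def ks_statistic(a: list[float], b: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: max gap between the empirical CDFs."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Advance past every value <= x so ties are counted in both CDFs.
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


def shift_detected(reference: list[float], live: list[float], alpha: float = 0.05) -> bool:
    """Flag a shift when the KS statistic exceeds the large-sample critical value."""
    n, m = len(reference), len(live)
    c_alpha = math.sqrt(-math.log(alpha / 2) / 2)  # about 1.36 for alpha = 0.05
    return ks_statistic(reference, live) > c_alpha * math.sqrt((n + m) / (n * m))


rng = random.Random(0)
dev_feature = [rng.gauss(0.0, 1.0) for _ in range(2000)]     # development data
deploy_feature = [rng.gauss(0.5, 1.0) for _ in range(2000)]  # drifted deployment data
print(ks_statistic(dev_feature, deploy_feature), shift_detected(dev_feature, deploy_feature))
```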

The deployment setting(s) should be addressed thoroughly in the product requirements document, as ML serving (or deploying) is an overloaded term that needs careful consideration. First, there are two main types: internal, as APIs for experiments and other usages mainly by data science and ML teams, and external, meaning an ML model that is embedded or consumed within a real application with real users. The serving constraints vary significantly when considering cloud deployment vs. on-premise or hybrid, batch or streaming, open-source solution or containerized executable, etc. Moreover, the data at deployment may be limited due to compliance, or we may only have access to encrypted data sources, some of which may only be accessible locally; these scenarios may call for advanced ML approaches such as federated learning13 and other privacy-oriented ML14. And depending on the application, an ML model may not be deployable without restrictions; this typically means being embedded in a rules engine workflow where the ML model acts like an advisor that discovers edge cases in rules. These deployment factors are rarely considered in model and algorithm development despite their significant influence on modeling and algorithmic choices; that said, hardware choices, such as GPU versus edge devices, typically are considered early on. It is crucial to make these systems decisions at Level 6: not so early that serving scenarios and requirements are still uncertain, and not so late that the corresponding changes to model or application development risk deployment delays or failures. This marks a key decision for the project lifecycle, as this expensive ML deployment risk is common without MLTRL (see Fig. 4).
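The rules-engine pattern described above can be sketched as follows, assuming a hypothetical payments workflow: the rules alone decide the outcome, and the model's estimated rejection probability is used only to flag cases where it strongly disagrees with the rules, i.e., candidate edge cases for human review. The rule, the placeholder model, and the 0.8 confidence level are illustrative.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    applies: Callable[[dict], bool]
    outcome: str  # "approve" or "reject"


def decide(case: dict, rules: list[Rule], model_risk: Callable[[dict], float],
           confidence: float = 0.8) -> dict:
    """Rules make the decision; the model only flags likely edge cases for human review."""
    outcome, fired = "approve", "default"
    for rule in rules:  # first matching rule wins
        if rule.applies(case):
            outcome, fired = rule.outcome, rule.name
            break
    risk = model_risk(case)  # model's estimated probability that the case should be rejected
    disagrees = (outcome == "approve" and risk >= confidence) or (
        outcome == "reject" and risk <= 1 - confidence)
    return {"outcome": outcome, "rule": fired, "needs_review": disagrees}


def toy_model(case: dict) -> float:
    # Placeholder for the trained model's rejection probability.
    return 0.9 if case["new_account"] else 0.1


# Hypothetical rule for a payments workflow.
rules = [Rule("large_amount", lambda c: c["amount"] > 10_000, "reject")]

for case in [{"amount": 500, "new_account": True},
             {"amount": 20_000, "new_account": False},
             {"amount": 500, "new_account": False}]:
    print(case, decide(case, rules, toy_model))
```

The first two cases are flagged (an approved case the model considers risky, and a rejected one it considers safe), while the third passes without review; the model never overrides the rules.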

Level 6 data—Additional data should be collected and operationalized at this stage towards robustifying the ML models, algorithms, and surrounding components. These include adversarial examples to check local robustness15, semantically equivalent perturbations to check the consistency of the model with respect to domain assumptions16,17, and collecting data from different sources and checking how well the trained model generalizes to them. These considerations are even more vital in the challenging deployment domains mentioned above with limited data access.
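Checks with semantically equivalent perturbations can be written as metamorphic tests: apply transformations that should not change the answer and measure how often the prediction stays the same. The toy keyword classifier and the three perturbations below are hypothetical; real perturbations must be derived from the domain assumptions the model is expected to respect.

```python
from typing import Callable


def consistency_check(model: Callable[[str], str], inputs: list[str],
                      perturbations: dict[str, Callable[[str], str]]):
    """Return the agreement rate and the (input, perturbation) pairs that changed the output."""
    failures = []
    total = 0
    for text in inputs:
        base = model(text)
        for name, perturb in perturbations.items():
            total += 1
            if model(perturb(text)) != base:
                failures.append((text, name))
    rate = 1.0 - len(failures) / total if total else 1.0
    return rate, failures


def toy_sentiment(text: str) -> str:
    # Placeholder keyword model standing in for a trained classifier.
    return "positive" if "good" in text.lower() else "negative"


# Perturbations assumed to preserve meaning in this (hypothetical) domain.
perturbations = {
    "uppercase": str.upper,
    "trailing_space": lambda t: t + " ",
    "synonym": lambda t: t.replace("good", "great"),
}
rate, failures = consistency_check(toy_sentiment,
                                   ["The service was good", "The service was slow"],
                                   perturbations)
print(rate, failures)
```

Here the synonym swap flips the toy model's prediction for one input, giving an agreement rate of 5/6 and pointing to the exact input and perturbation to investigate.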

Level 6 review—The review focuses on code quality, the set of newly defined product requirements, system SLA and SLO requirements, the data pipelines spec, and an AI ethics revisit now that we are closer to a real-world use-case. In particular, regulatory compliance is mandated for this gated review; data privacy and security laws are changing rapidly, and missteps with compliance can make or break the project.

For integrating the technology into existing production systems, we recommend that the working group have a balance of infrastructure engineers and applied AI engineers. This stage of development is vulnerable to latent model assumptions and failure modes, and as such cannot be safely developed solely by software engineers. Important tools for them to build together include:

Tests that run use-case-specific critical scenarios and data slices, which a proper risk-quantification table will highlight.

A “golden dataset” should be defined to baseline the performance of each model and each successive model (see the computer vision app example in Fig. 5), for use in the continuous integration and deployment (CI/CD) tests.